Original Photo creds: The Thinker Sculpture, Vivian Gomes, 2023, San Francisco

Alright folks, by popular demand, I’ve been asked: “Can you speak to AI ethics?” And here’s my response: “Let me help y’all wrap your heads around the space. Enough for you to start asking yourselves questions, survive a few questions about the space, and ultimately be equipped to reflect on where you think the lines should be.”

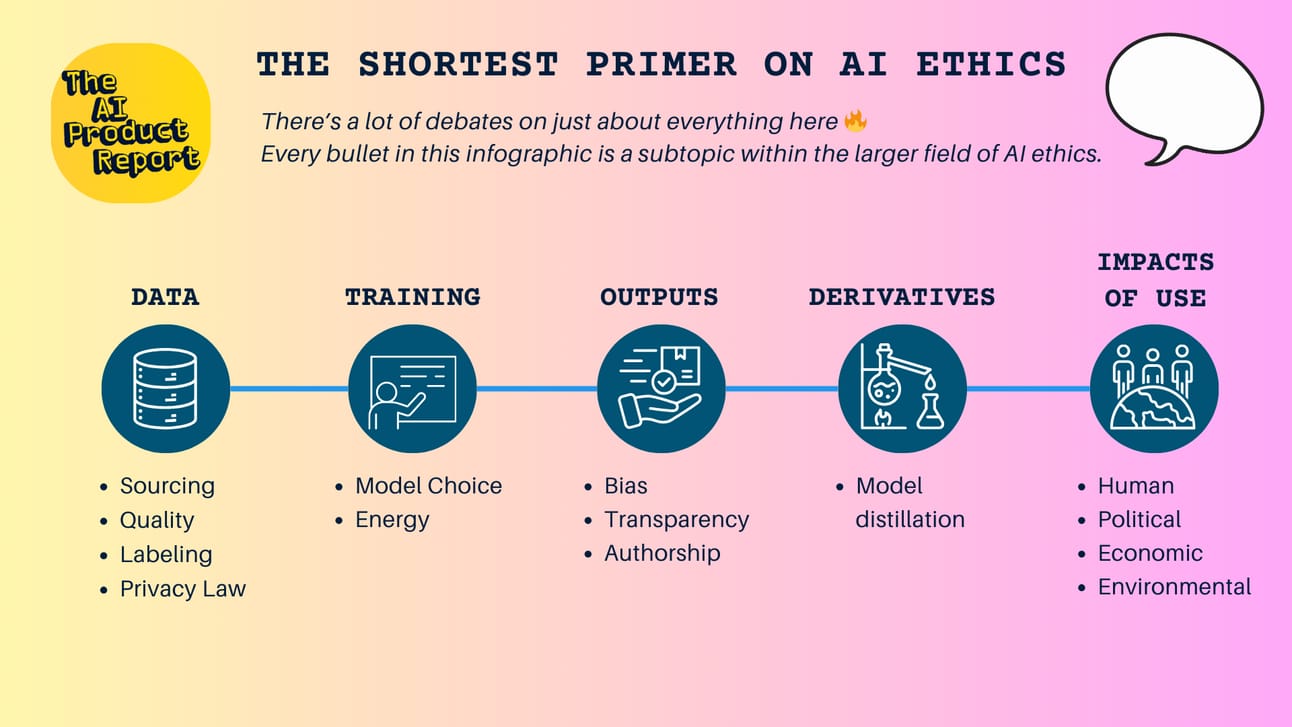

The gameplan here is to give you a rough map of the big questions all along the AI creation ➡️ usage pipeline. I want to make this as digestible and as useful as possible. If the rundown isn’t fast enough for ya, I’ve pulled in the infographic I shared on LinkedIn that summarizes things even further.

For the readers asking themselves “Why should I care?”, here’s why:

Ultimately, caring about AI ethics is essential to building trust in the technology and steering its global impact along an intentional course. AI is already affecting lives across many sectors, from hiring decisions to daily choices, which makes ethics crucial for ensuring fairness, transparency, and accountability. Without ethical frameworks, AI systems could perpetuate biases, violate rights, and harm vulnerable populations. As AI reshapes work and society, we need to ensure it’s used responsibly to limit economic disruption and preserve human values.

For Intrapreneurs, Founders, Creators, Investors, and anyone dabbling: Pin this post and come back to it. You can use this as a baseline checklist to see the rough shape of how ethical your AI project really is.

Sidenote: AI is broad, too broad, so for this write-up I’m including the following under the umbrella:

LLMs

Predictive Neural Network models

Predictive Machine Learning algorithms that feed into decision-making projects

Let’s dive in!

ETHICAL QUESTIONS ALONG THE AI CREATION TO USE PIPELINE

Data

(Comes in many shapes and sizes: text, images, audio, video, etc.)

Sourcing - Where do you get your data to train your model?

Are you scraping your data from specific sources?

Well, this is a whole beehive of ethical questions. Someone almost certainly owns the content you’re taking.

Are you taking data from the Public Domain?

“What could reasonably be considered public domain?” versus “What is explicitly stated to be part of the public domain?”

Are you using data that’s covered by copyright protections in the registered jurisdiction?

If so, there’s a real chance that AI models trained on this kind of data infringe on quite a few laws.

Are you infringing on any privacy laws to gather the data you need? Are you using data that’s been leaked as part of a data breach?

Quality

Are you collecting factual data?

Is your scraper aimed at the top 10 Google results for a topic? (Popular isn’t the same as accurate.)

Is the source of your data trustworthy?

Can the data you gathered be corroborated across multiple independent sources, so that its validity is established by consensus?
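For the consensus question, here’s a minimal sketch of the idea in Python, assuming each source reports a single value for the same fact. The function name `consensus_value`, the thresholds (`min_sources=3`, `min_agreement=0.6`), and the sample data are all hypothetical. A real pipeline would also need to confirm the sources are actually independent: four sites copying one article don’t count as four sources.

```python
from collections import Counter

def consensus_value(claims, min_sources=3, min_agreement=0.6):
    """Return the value most sources agree on, or None if there's no consensus.

    claims: dict mapping a source name to the value it reports for one fact.
    """
    if len(claims) < min_sources:
        return None  # too few sources to call it a consensus
    value, count = Counter(claims.values()).most_common(1)[0]
    if count / len(claims) >= min_agreement:
        return value
    return None  # sources disagree too much: flag for human review

# Hypothetical reports of the same fact from four sources
reports = {"source_a": "1889", "source_b": "1889", "source_c": "1887", "source_d": "1889"}
print(consensus_value(reports))  # 3 of 4 agree (75%), so "1889" is printed
```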

Labeling

Whatever amount of data labeling/annotation your purposes require, annotating data is grueling work, since many models need data at scale before they start yielding meaningful results. The threshold of “how much data is enough data?” varies from model to model.

Are the people labeling the data paid a fair wage?

What variable should a fair wage be based on? Time worked? Label volume? Labeling accuracy?

Say it costs 20 cents per label. At scale, that means a model built on a pool of 5 million data rows costs ~$1 million in labeling to make. If a company were to productize that model and resell it to individuals at $20 per seat per month, would this wage be considered fair for the value of the work provided?
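Here’s the arithmetic from that example spelled out, assuming one label per row and counting only the labeling bill (no compute, staff, or infrastructure). Amounts are kept in whole cents and dollars to avoid floating-point rounding.

```python
PRICE_PER_LABEL_CENTS = 20  # 20 cents per label, from the example above
ROWS = 5_000_000            # assumes exactly one label per data row
SEAT_PRICE_USD = 20         # $20 per seat per month

labeling_cost_usd = PRICE_PER_LABEL_CENTS * ROWS // 100       # cents -> dollars
seat_months_to_recoup = labeling_cost_usd // SEAT_PRICE_USD   # seat-months of revenue needed

print(f"Labeling cost: ${labeling_cost_usd:,}")
print(f"Seat-months to cover labeling alone: {seat_months_to_recoup:,}")
```

Under those assumptions, 50,000 seat-months works out to roughly 4,200 subscribers for a single year; past that point, the labeling bill is paid off while the labeled data keeps generating revenue.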

Are they performing their work in good, humane conditions?

Are there support frameworks in place for the labelers to consistently label data correctly?

Are these labelers carrying inherent biases that will affect the labels they produce? Yes. How is this being mitigated?

Are the labelers inherently qualified to be handling the data presented to them?
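One common (though not the only) way to check whether labelers are labeling consistently, and to spot systematic disagreements that may point to bias, is to have two people label the same items and measure their agreement with Cohen’s kappa. A minimal sketch with made-up labels: a kappa of 1 means perfect agreement, and 0 means agreement no better than chance.

```python
from collections import Counter

def cohens_kappa(labels_a, labels_b):
    """Agreement between two annotators on the same items, corrected for chance."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two equal-length, non-empty label lists")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    # Probability the two annotators pick the same label purely by chance
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1:
        return 1.0  # both used one identical label for every item
    return (observed - expected) / (1 - expected)

# Hypothetical labels from two annotators on the same six items
annotator_1 = ["toxic", "ok", "ok", "toxic", "ok", "ok"]
annotator_2 = ["toxic", "ok", "toxic", "toxic", "ok", "ok"]
print(round(cohens_kappa(annotator_1, annotator_2), 2))  # prints 0.67
```

Low or uneven agreement doesn’t tell you *who* is biased, but it tells you where to look, and where better guidelines or support for labelers might help.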

Say you’re outsourcing your data annotation via a service provider:

When it comes to crowdsourcing data annotation, consider CAPTCHAs: they exist to verify that users are human, and in the process those same humans often end up performing a task that bots “shouldn’t” be able to do, like picking out objects in images.

Well, the answers to these small tasks, the ones that get us past the internet’s bridge troll and onto whatever website we’re visiting, are captured. Was gaining the “right of passage” to visit a website free labor?

International law

Privacy: Beyond sourcing, the privacy implications of how AI systems collect and use data deserve their own scrutiny.

Bias in Data

Your sourcing population may be skewed in ways you don’t expect.

Have you audited your data for various biases?

Your data may hold some inherent biases based on how it was captured, your client base, geography, or political climate.
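A representation check is one of the simplest audits to start with: compare each group’s share of your data against a reference population you trust. A minimal sketch, assuming you already have group counts and reference shares; the group names, numbers, and 5-point tolerance are hypothetical, and this only catches skew in *who* is in the data, not bias in how they were labeled.

```python
def representation_gaps(dataset_counts, reference_shares, tolerance=0.05):
    """Flag groups whose share of the dataset strays from a reference share.

    dataset_counts: group -> number of rows in your data
    reference_shares: group -> share you'd expect (e.g. from census or market data)
    Returns group -> (actual share - expected share) for groups beyond tolerance.
    """
    total = sum(dataset_counts.values())
    gaps = {}
    for group, expected in reference_shares.items():
        actual = dataset_counts.get(group, 0) / total
        if abs(actual - expected) > tolerance:
            gaps[group] = round(actual - expected, 3)
    return gaps

# Hypothetical example: data skewed toward one region
counts = {"north": 7_000, "south": 2_000, "east": 1_000}
reference = {"north": 0.40, "south": 0.35, "east": 0.25}
print(representation_gaps(counts, reference))
```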

Here’s a 7-year-old Google video that explains it better than I can:

Training

Can a model trained off of “unethical” data be itself ethical?

What about your open-source LLM of choice? Some might not be as ethical as you’d think because of their underlying training data.

What behavior are you training for your model?

Some of it is data-dependent, but depending on your use case, training may come down to choosing the bias that best suits your purposes.

E.g., a self-driving car choosing to swerve into another car rather than hit the pedestrian it’s on a collision course with.

Output

Bias in output: Related to data bias, but it’s worth distinguishing between bias inherent in the data and bias that emerges (or gets amplified) in the model’s outputs.

Nondeterminism

Ownership of the output products

Transparency
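To make the data-versus-output bias distinction concrete, one simple check is to compare the positive-outcome rate per group in the training labels with the same rate in the model’s predictions. This is a demographic-parity-style comparison, just one of many fairness metrics, and the groups, labels, and predictions below are invented for illustration.

```python
def positive_rate_by_group(groups, outcomes):
    """Share of positive (1) outcomes for each group."""
    totals, positives = {}, {}
    for g, y in zip(groups, outcomes):
        totals[g] = totals.get(g, 0) + 1
        positives[g] = positives.get(g, 0) + y
    return {g: positives[g] / totals[g] for g in totals}

def rate_gap(rates):
    """Difference between the highest and lowest group rates."""
    return max(rates.values()) - min(rates.values())

groups      = ["A", "A", "A", "A", "B", "B", "B", "B"]
labels      = [1, 1, 0, 0, 1, 0, 0, 0]  # hypothetical ground truth in the data
predictions = [1, 1, 1, 0, 0, 0, 0, 0]  # hypothetical model output

data_gap = rate_gap(positive_rate_by_group(groups, labels))
output_gap = rate_gap(positive_rate_by_group(groups, predictions))
print(f"gap in data: {data_gap:.2f}, gap in output: {output_gap:.2f}")
```

Here the data already carries a 0.25 gap between groups, but the hypothetical model widens it to 0.75: bias that emerged in the output rather than being inherited one-for-one from the data.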

AI Model Distillation & Model Optimization

When it comes down to it, is your source model ethically assembled in the first place?

How did you obtain the larger model you need to optimize or distill? If you’ve managed to get your hands on a larger proprietary model, using it as a source for building a training dataset becomes ethically dubious.

This raises a further question if you have any intent beyond private use for this distillation (making yours commercially available, or even releasing it as an open-source project): have you functionally stolen the secret sauce someone else put together on your way to developing your own spin on their flavor?

In other words: have you produced the “Large Mac” after studying McDonald’s Big Mac?
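For anyone unclear on what “using a larger model as a source” looks like in practice: in classic knowledge distillation, a smaller student model is trained to match the teacher’s softened output probabilities, so the teacher’s learned behavior (the secret sauce) is exactly what gets transferred. Below is a minimal, framework-free sketch of that loss; the logits are hypothetical, and real setups usually blend it with a standard loss on ground-truth labels. When the teacher is only reachable through an API, the same idea can take the form of collecting its generated outputs as training data instead.

```python
import math

def softmax(logits, temperature=1.0):
    """Convert logits to probabilities; higher temperature = softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions over the same classes."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """How far the student's softened predictions are from the teacher's."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # Scaling by T^2 is a common convention that keeps gradient sizes comparable
    return temperature ** 2 * kl_divergence(p_teacher, p_student)

teacher = [4.0, 1.0, 0.5]  # hypothetical logits from the large "teacher" model
student = [2.5, 1.5, 0.5]  # hypothetical logits from the small "student" model
print(round(distillation_loss(teacher, student), 4))
```

Training drives this loss toward zero, which is another way of saying the student ends up imitating the teacher as closely as it can.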

Impact of Use

It all comes down to the externalities that spin out of this pipeline.

Human

What kind of human impact do you foresee this technology having?

Does it fundamentally endanger humans?

Is your tech involved in causing human harm?

Can this application be misused? What guardrails do you have in place to prevent misuse?

Who is responsible for the outcomes of AI decisions?

How do you ensure the AI system is explainable?

How does this technology impact human decision-making and autonomy?

Does it reduce human agency?

Economic

What about your application justifies using AI over conventional software?

What is the potential fallout of your solution for other workers in field X?

E.g., writers and other artists affected by the use of AI in creative industries.

Are you considering the ethical implications of automation and job displacement?

Environmental

Modern tech is poorly energy-optimized at scale. How can you weigh the value the solution creates against the energy spent on its training and its ongoing, recurring use?

In your view, does this solution’s value outweigh the environmental cost of the energy spent on training and use, which is outsized compared to the energy needed to run a very simple program that performs well-defined tasks? Can you mitigate this impact somehow?
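To make that tradeoff less abstract, one rough approach is to amortize training energy over every task the model will ever serve, add the per-task inference energy, and compare the result with what a simple program would use for the same task. A sketch in Python; every figure below is a hypothetical placeholder, not a measurement of any real model.

```python
def energy_per_task_kwh(training_kwh, lifetime_tasks, inference_kwh_per_task):
    """Training energy spread over every task served, plus per-task inference."""
    return training_kwh / lifetime_tasks + inference_kwh_per_task

# All figures are hypothetical placeholders, not measurements.
ai_kwh = energy_per_task_kwh(training_kwh=500_000,
                             lifetime_tasks=100_000_000,
                             inference_kwh_per_task=0.003)
simple_program_kwh = 0.00001  # hypothetical cost of a plain rule-based script

print(f"AI: {ai_kwh:.3f} kWh/task vs. simple program: {simple_program_kwh:.5f} kWh/task")
print(f"ratio: {ai_kwh / simple_program_kwh:.0f}x")
```

The structure matters more than the made-up numbers: training energy shrinks per task as usage grows, but inference energy is paid on every single call, which is why ongoing, recurring use deserves as much scrutiny as training.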

Politics in AI

Internet vs. border jurisdictions

Privacy laws

Censorship

Standards Organizations

NIST and the EU AI Act: classifying technology by its real-world impact and its risk to human life and fundamental rights within a nation

Some major players are putting forward their own takes on AI ethics, since many expect AGI to arrive sooner rather than later.

Does this proposed Manifesto on Ethical Copyrights reach too far? Should there be some kind of trust network for identifying responsible AI users? Tell me in the comments!

Closing Opinion

I think there’s a way forward for AI as a whole. The ethical line in the sand will need to be redrawn, and by that admission alone, we’ll undoubtedly face much more turmoil before we reach any resolution. But I believe that through collaboration, a firm commitment to doing the right thing, and a shared sense of responsibility, we can guide the growth of this technology in a direction that benefits as many as possible. As messy and complicated as it may be, it’s only through facing these challenges together that our global society can truly harness the potential of AI and ensure its positive impact for generations to come.

The future continues to be weird, yes, but it's also brimming with possibilities.

Sam from The AI Product Report

Want to talk about this at greater length? Need more customized help on the matter? Email me at [email protected]. Don’t be shy, let’s talk.

I know there are a LOT of other AI-Product topics to cover like feedback loops and Agents+Agentics, so let me know if that’s something you want to see discussed!

Here’s an anonymous channel for you to send me your thoughts if the comments section isn’t for you! 😉